Surviving Windows Development Chemotherapy

- development

- windows

- devex

- technical

Windows is still the desktop OS of choice for enterprises across the globe, yet the vast majority of server operating systems are either Linux or BSD. Only a small contingent of server installations are Windows Server, most of which host Microsoft-specific applications such as Exchange. Doing development for Linux-destined applications on a Windows desktop can be a huge chore, especially when you don’t have admin access to cheat your way via WSL or superuser permissions.

Here’s a survival list that I’ve cobbled together covering both admin and non-admin setups.

The Ranges ¶

My best case scenario is that I use my own device(s). They have preconfigured backups, connectivity, firewalls and abundant monitor space. They also have full command and network access to my own development clusters. I often use my own infrastructure to test out solutions for clients, from fresh ForgeRock installations to using my graphics hardware for fine tuning PyTorch deep learning models.

When I can’t use my own machine I’ll be issued with a laptop. Ranked from best to worst:

- A Linux machine (this has never happened)

- A Mac

- A Windows machine with admin

- A second hand 1970s toaster

- A Windows machine without admin

My professional opinion is that Windows without admin access is not suitable for full stack development. It can be done, but it is so much worse that it’s crippling.

Windows machines with admin access still lag Linux native environments, though this is improving with Windows Subsystem for Linux, VS Code and other development friendly initiatives. However, whilst Microsoft were building a code editor, Linux built kernel-level multiple-namespace process isolation via containerisation, which is fantastic for managing the layers of crap in modern technologies. That level of process management and control is still foreign to Windows, so developers end up leaning on VMs and other substitutes.

Development Environment ¶

Whether you have admin access on your Windows machine or not, install VS Code. I’ve found VS Code a huge cheat for managing simple development tasks as it comes preconfigured for Windows. You don’t have to mess with git, extension installation paths or permission issues. Microsoft VS Code works well on Windows, which is honestly a welcome surprise.

Admin ¶

If you’re an admin then you should probably activate Windows Subsystem for Linux and give it a test run. Doing so should give you enough functionality to install and run what you want, possibly with some workarounds.

If WSL falls short, just run a VM. A VM gives you full control to do almost everything from inside it, especially if you take advantage of proper configuration such as VirtualBox’s directory mounting.

Whilst you could develop directly inside the VM, the likelihood is that your peripheral performance will be poor, with slow desktop rendering, input lag and other issues. To combat this, keep your desktop tooling (i.e. VS Code) on the Windows side, mount your development directories inside the VM, and SSH into the VM for compilation.

Mounted directories are going to be slower and incompatible with some Linux operations, such as Docker mounting. A workaround is to provision unmounted mirrored directories that exist solely inside the VM where code can be copied to, built, run and the resulting artefacts copied back. This is ugly, but at some point your code will need to be packaged into a container and exported. At least with this method you can run a full build locally. Also, as you’re running a VM you can put together local-to-the-VM scripts that handle directory copying and alias them inside of the VM terminals. Simple copy; cd; docker build; copy; rm; cd procedures.
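As a rough sketch of that copy, build, copy back routine, here is a Python version that could live inside the VM. The function name, arguments and directory layout are all made up for illustration, and the build command is passed in so it can be docker build or anything else that drops an artefact file into the working directory.

```python
import shutil
import subprocess
from pathlib import Path


def build_in_local_copy(mounted_src, local_work, artefact_name, build_cmd, mounted_out):
    """Copy code off the mount, build it in a VM-only directory, copy the artefact back."""
    mounted_src = Path(mounted_src)
    local_work = Path(local_work)
    mounted_out = Path(mounted_out)

    # copy: mirror the mounted source into a directory that exists solely inside the VM
    if local_work.exists():
        shutil.rmtree(local_work)
    shutil.copytree(mounted_src, local_work)

    # cd + build: run the build with the unmounted copy as the working directory
    subprocess.run(build_cmd, cwd=local_work, check=True)

    # copy: send the resulting artefact back to the mounted side
    mounted_out.mkdir(parents=True, exist_ok=True)
    result = mounted_out / artefact_name
    shutil.copy2(local_work / artefact_name, result)

    # rm: clean up the scratch copy
    shutil.rmtree(local_work)
    return result
```

Alias a call to this inside the VM terminal and the whole dance becomes one command. The build step is whatever your project needs; the only assumption baked in is that it leaves a single artefact file in the working directory.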

Non-admin ¶

Install VS Code and git utilities. For any UAC popups you can generally click cancel and the installation will then default to user level installs without admin.

For run-times, you’ll be installing via downloaded MSI packages and clicking through Windows installation prompts. For example, installing Python means going to python.org, pressing the download button and clicking through prompts like a goofball. Whilst there are package managers for Windows like Chocolatey, you’re probably setting yourself up for disaster using them as a non-admin:

- If you hit issues, there’s a wealth of options you just can’t use as a non-admin. Separating the non-admin solutions from the admin enabled solutions is a substantial time sink

- If a business isn’t trusting you with local admin I’d wager installing a package manager is going to really set them off

- If the package manager is forcibly removed then everything that has been installed is going to be caught in the blast radius. If you have a problem with your Python install, then it’s limited to your Python installation. If you have a problem with your package manager then it’s going to affect everything you’ve ever installed or configured with that package manager

If you’re good to tackle the package manager route then go for it. I prefer the boring route of installing run-times individually so I don’t have to deal with horrid disasters later.

The same UAC cancellation method should switch most installers to user only. My experience is that they all try admin first then fall back to user level when UAC prompts fail / are cancelled.

A note on JS and other packages ¶

When installing Python you’ll get pip. When installing Node.js you’ll get npm. Remember, we’re installing raw here so those installations have access to everything your user has access to.

As a general rule I like to know my supply chain to minimise the avenues for problems. That doesn’t mesh with some ecosystems, especially the JavaScript ecosystem, which is a verified problem factory. I would highly recommend a review of every package you’re planning on using on Windows to see what’s going to have access to your system. Docker can’t save you on Windows.
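For the Python side, a starting point for that review is simply listing what is installed in the current interpreter and what each package declares as a dependency. This is a minimal sketch using the standard library’s importlib.metadata; the function name is my own, and it only reports declared metadata, it doesn’t audit what the packages actually do.

```python
from importlib import metadata


def installed_packages():
    """Return {name: (version, [declared requirements])} for the current interpreter."""
    report = {}
    for dist in metadata.distributions():
        name = dist.metadata["Name"]
        if name is None:
            # Skip broken or partial installs that carry no usable metadata
            continue
        # dist.requires is None when a package declares no dependencies
        report[name] = (dist.version, list(dist.requires or []))
    return report


if __name__ == "__main__":
    for name, (version, requires) in sorted(installed_packages().items()):
        print(f"{name}=={version} ({len(requires)} declared dependencies)")
```

Anything with a long dependency list is where I’d start reading.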

Terminals ¶

Admin ¶

By now you should have WSL running, or you’ve shelled into your VM and are running commands from there. Either way you’re good to use whatever terminal you like.

Non-admin ¶

PowerShell is its own beast. PowerShell is not a Unix shell, it’s a Windows shell built for Windows tooling. Therefore, PowerShell is absolutely not a drop-in replacement for Unix-style shells. If this Windows adventure is just a bump in the road, it’s not worth scaling the PowerShell learning curve and it’s best to use something else instead.

Most Windows users I know use PuTTY, however their use cases are that they’re only running remote shells and never run local commands. For that use case PuTTY is totally fine, but be aware that PuTTY doesn’t do local shells. Maybe you can remote into your own machine using an external interface, however, that will require running an SSH server. Once again, if you’re not allowed admin access then loading an SSH server and busting it open is probably going to set off some alarm bells. Plus you’ll need to handle the configuration of the server, running it as a background task and more.

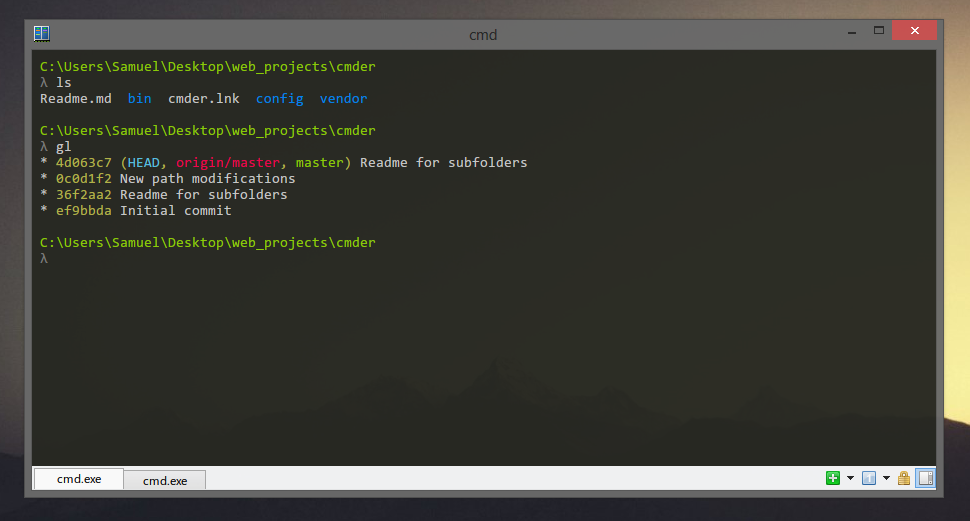

I settled on cmder. It looks like a modern day terminal and mostly runs like a terminal, however it does come with some non-Unix behaviours. It does offer tiling, tabs and its own version of aliases which are all day-to-day necessities. Most importantly, cmder comes as a standalone package where the binary is packaged with all relevant artefacts in a single directory and can be run from almost anywhere. This is best in class packaging.

Not all Unix aspects will port directly to cmder, but cmder includes enough mechanisms to get by. For example, having an SSH configuration file is brilliant for reducing connection configurations to a phone book of regular servers, e.g. ssh localserver loads in the full connection configuration for host localserver as defined in your $HOME/.ssh/config file. I couldn’t get SSH configuration files to be read at all on Windows, although I was able to achieve the same functionality by hard-coding each server’s equivalent command as a cmder alias. It’s non-standard and uglier, but it works.
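If the phone book grows, the alias lines can be generated rather than typed. The sketch below is illustrative only: the server names, hosts, ports and key path are invented, and it assumes cmder’s aliases are stored as doskey-style name=command $* lines, which is worth checking against your own cmder install before pasting anything in.

```python
# Hypothetical phone book: alias name -> connection details (all values invented)
SERVERS = {
    "localserver": {
        "host": "192.168.1.10",
        "user": "dev",
        "port": 2222,
        "key": r"C:\Users\someguy\.ssh\id_ed25519",
    },
    "buildbox": {"host": "build.example.internal", "user": "ci"},
}


def ssh_command(entry):
    """Expand one phone book entry into the full ssh command line."""
    parts = ["ssh"]
    if "key" in entry:
        # Quote the key path in case the user directory contains spaces
        parts += ["-i", f'"{entry["key"]}"']
    if "port" in entry:
        parts += ["-p", str(entry["port"])]
    parts.append(f'{entry["user"]}@{entry["host"]}')
    return " ".join(parts)


def alias_lines(servers):
    """Render one 'name=command $*' line per server (assumed doskey-style format)."""
    return [f"{name}={ssh_command(entry)} $*" for name, entry in servers.items()]


if __name__ == "__main__":
    print("\n".join(alias_lines(SERVERS)))
```

The trailing $* passes any extra arguments through to ssh, so typing localserver followed by a command behaves roughly like ssh localserver followed by that command would with a config file.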

No matter the terminal, running any command is much, much slower than Unix environments. I don’t know if this is Windows being crap or corporate laptops being loaded with bottom barrel software, but sluggish terminal usage has been the norm in my Windows experience.

Weirdly enough the last Windows 11 upgrade I experienced improved speed and performance. I don’t have any explanation for this so who knows what you may experience.

Environment Variables ¶

Environment variables are handled via System Properties for global variables (requires admin) and Environment Variables for user environment variables. Each of these is controlled via a GUI. I can’t tell you which GUI as Microsoft keep changing the location but you can access either directly via search until they “improve” that too.

I’ve never had updated Environment Variables reliably populate through to running or restarted applications when updated or added. I’ve regularly had to restart the entire machine to populate the variables. Restarting the whole damn thing is a Windows trait you’ll need to embrace.

Fundamentals ¶

Given the restart aspect and the complete unreliability of variables to update compared to Unix environments, it’s been hugely valuable to use a predictable and clear directory structure. For example:

- Have a custom-binaries directory in your home that is always on your PATH. Any downloaded binaries can be dumped here without needing an update and a restart

- Do the same with user level run-time installations. For example, making a python directory in your home directory lets you dump custom scripts or even the whole Python installation into an obvious tree. Now you can set your PYTHONPATH once and not have to restart every time you install a new tool

Windows Environment Variable keys are case insensitive, so Path == PATH == path. I have no idea why, but this is worth remembering whilst reading grammatically ambiguous Stack Overflow comments.
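Since I never trust that a variable change has actually landed, a quick check from the process you care about saves a lot of guessing. This is a small sketch, with a function name of my own choosing, that reports whether a directory is on the PATH the current process can see. On Windows, Python’s os.environ lookups ignore key case, so asking for PATH also finds Path.

```python
import os


def is_on_path(directory, path_value=None):
    """Return True if directory is one of the entries in a PATH-style string."""
    if path_value is None:
        # Falls back to the PATH of the running process, which may be stale
        path_value = os.environ.get("PATH", "")
    # normpath drops trailing separators; normcase lowercases on Windows only
    wanted = os.path.normcase(os.path.normpath(directory))
    entries = [entry for entry in path_value.split(os.pathsep) if entry]
    return any(os.path.normcase(os.path.normpath(entry)) == wanted for entry in entries)


if __name__ == "__main__":
    target = os.path.join(os.path.expanduser("~"), "custom-binaries")
    print(target, "on PATH:", is_on_path(target))
```

If this prints False straight after you’ve updated the variable in the GUI, the terminal or editor that launched it is still holding the old environment.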

Git ¶

Git I install directly via the git MSI. I go for the full install so a bunch of Unix-y binaries such as ls, mkdir & co are also installed. You also get git-bash which is a kind of terminal that can fit some use cases in a pinch.

Mostly, I use the built in VS Code source control tab. It has a better interface and avoids having to deal with clunky and slow terminal emulators. This only really works with simple stage, commit, push workflows, but if something needs rebased then you can use git-bash.

Files ¶

There’s a long list of use cases where I don’t bother attempting to run code locally - it needs to get shipped off to a good OS. This list is orders of magnitude longer in a non-admin setup. Given the SSH configuration problems and the clunky nature of Windows terminals, I just use FileZilla. It’s old but it’s dependable and it doesn’t try to fight Windows by being something Windows doesn’t like, i.e. it’s a GUI application that doesn’t need admin for installation.

FileZilla will handle FTP, SFTP (file transfer over SSH) and other common connections, and has a mature enough interface to allow for key and server management, port settings and most use cases. I’ve not hit a situation where FileZilla couldn’t do what I needed it to do, however, I haven’t had to use FileZilla with things like NTLM authorised bastions.

Local Scripting ¶

Most repositories I work in end up with some level of scripting to allow for automating per-project tasks. For example, this blog has a spell checking script that I don’t want in my deployment pipeline but I do run often enough that it’s worth automating.

Admin ¶

Write your scripts as usual and run them out of the VM or WSL. I wouldn’t advise maintaining Windows specific scripts if you can run Unix scripts in a Unix environment.

Non-admin ¶

There are a couple of ways to deal with this. The first is to run simple commands via a .bat file. I hesitate to call it a script as you’ll likely include hard-coded commands without any conditional logic or arguments. Use cases would include development environment commands that take hard-coded parameters. For example, a Python Flask server with a SQLite database in the db/ directory would be:

    $ cat .\quickrun.bat
    py.exe main.py -db sqlite:./db/database.sq3

Once the commands get more complicated this pattern falls apart. One approach is to write basic scripts in Python / JS / etc, which have cross-OS path handling functions in their standard libraries. This means it’s quite simple to write a script that will work on both local Windows and a Unix OS.

    $ cat quickrun.py
    import os
    import sys

    # Build the path with os.path.join so the separator matches the host OS
    cwd = os.getcwd()
    databasePath = os.path.join(cwd, "db", "database.sq3")

    # sys.platform is "win32" on Windows, including 64-bit installs
    if sys.platform == "win32":
        print('Windows: ' + databasePath)  # Windows => C:\Users\someguy\proj\db\database.sq3
    else:
        print('Unix: ' + databasePath)  # Unix => /home/someguy/proj/db/database.sq3

Path management is the primary issue and control of path calls in the above manner handles this. As you might spot though, the above path building is completely different to the standard pattern found in most code bases that take Unix style paths as standard. Some software, including my own, doesn’t bother to support Windows. Once you lose control of the full path management and location by utilising packages or imported code it’s possible that your scripts won’t work on Windows.
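Where a code base already passes Unix style path strings around, one option is to leave those strings alone and convert them at the point of use. The sketch below uses pathlib from the standard library; to_native and preview_windows are names I’ve made up for illustration, and it only handles relative paths.

```python
from pathlib import Path, PurePosixPath, PureWindowsPath


def to_native(unix_style, base=None):
    """Turn a relative Unix style path string into a path for the current OS."""
    # Split on "/" regardless of which OS this is running on
    parts = PurePosixPath(unix_style).parts
    root = Path(base) if base is not None else Path.cwd()
    return root.joinpath(*parts)


def preview_windows(unix_style):
    """Show how the same relative path renders on Windows, even when run on Unix."""
    return str(PureWindowsPath(*PurePosixPath(unix_style).parts))


if __name__ == "__main__":
    print(to_native("db/database.sq3"))
    print(preview_windows("db/database.sq3"))
```

pathlib on Windows will also accept forward slashes directly, so this matters most when the path ends up somewhere that isn’t so forgiving, such as a string handed to another tool.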

I’ve found this to be less of a problem with JS scripts, including those often found and packaged in package.json files but I do find a lot of issues with Python libraries. For example, Ansible won’t run on Windows and it’s one of the oldest and most established Python projects that I can think of. Random crap from npm works just fine. Windows often has me encountering things that should not be.

I still wouldn’t recommend using most stuff on npm, but if it’s a widely trusted project with a sensible supply chain and it works as an npx call in Windows, then happy days.

Exceptions ¶

Most of this article won’t apply in wholly Windows / Microsoft end to end environments. This is where the user machines are Windows, the servers are Windows and there are absolutely no non-Windows environments involved. I’ve never worked in environments like this as the vast majority of production services and systems run on Linux boxes.