Should it cloud?

I know it does, when it wants to...

- cloud

- unhappy

- architecture

I’ve been wrestling a horde of Macs, herding them into a flock of standardised, well-trained beasts that can handle iOS builds in a somewhat predictable way. It’s not my favourite experience but, like mortality, I can see the light at the end of the tunnel. To get to this point, I’ve fallen back on some old standbys like Ansible and Bash.

When I label each of these tools an ‘old standby’, I explicitly mean old. The version of Bash bundled with Macs was released in 2006. Ansible has been around for over a decade. Neither of them is going to attract degenerate levels of VC investment. However, they work, and they work wonderfully. When I bust open Ansible or Bash, I know exactly what I’m going to get.

With the Macs beaten back for the day, I had enough energy to deploy a quick site as a tick-box exercise. I’ve got my own Terraform module library, pre-built Docker images, a custom project template and enough pre-written, tested and deployed code to do most of the job quickly and effectively. There’s nothing blocking the release of a one-page site, and so nothing that should erode my mood, patience and general satisfaction with how I’m spending the dwindling seconds of my existence.

Twenty minutes after first initialising the project, I’m ready to deploy. I fire off Terraform and get my first error: the module I wrote was pinned to version 1.2.2, whereas my latest Terraform binary is 1.4.2.
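The error means the module’s `required_version` constraint admits 1.2.2 but not 1.4.2; the module’s actual `terraform` block isn’t shown, so the exact pin is an assumption. A minimal sketch of a looser constraint, assuming the module doesn’t rely on anything that changed within Terraform 1.x:

```hcl
terraform {
  # Accept 1.2.2 and any later 1.x release, but refuse a future 2.0,
  # rather than pinning the module to one exact binary version.
  required_version = ">= 1.2.2, < 2.0.0"
}
```

This leans on HashiCorp’s stated compatibility promises for Terraform 1.x instead of re-testing the module against every release; a more cautious setup might use `~> 1.2.2`, which only admits 1.2.x patch releases.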

Being in the process of slowly dying, I’m short on time, so I simply use an old version of Terraform. Having the option to do that is a wonderful thing. Unfortunately, after I downgraded to the aged, 10-month-old version 1.2.2, the following errors vomited onto my terminal.

```
╷
│ Error: error creating S3 bucket ACL for mystery.site: AccessDenied: Access Denied
│ status code: 403, request id: XXXXXXXXXXXXXX, host id: XXXXXXXXXXXXXX
│
│ with module.site.aws_s3_bucket_acl.acl,
│ on .terraform/modules/site/full_service/static_sites/v1.0/main.tf line 51, in resource "aws_s3_bucket_acl" "acl":
│ 51: resource "aws_s3_bucket_acl" "acl" {
│
╵
╷
│ Error: Error putting S3 policy: AccessDenied: Access Denied
│ status code: 403, request id: XXXXXXXXXXXXXX, host id: XXXXXXXXXXXXXX
│
│ with module.site.aws_s3_bucket_policy.policy,
│ on .terraform/modules/site/full_service/static_sites/v1.0/main.tf line 69, in resource "aws_s3_bucket_policy" "policy":
│ 69: resource "aws_s3_bucket_policy" "policy" {
│
╵
╷
│ Error: Missing required argument
│
│ with module.site.aws_cloudfront_distribution.s3_distribution,
│ on .terraform/modules/site/full_service/static_sites/v1.0/main.tf line 169, in resource "aws_cloudfront_distribution" "s3_distribution":
│ 169: resource "aws_cloudfront_distribution" "s3_distribution" {
│
│ The argument "origin.0.domain_name" is required, but no definition was found.
╵
```

That’s both weird and uninformative. I used the same module to build southall.solutions a few months ago without these errors.

Something has changed between the start of 2023 and today that has completely borked a custom Terraform module I had previously used to roll out over half a dozen sites. And when I say borked, I mean a load of Access Denied errors for an AWS S3 bucket this very code had just created.

I’ve got no choice. I’ll have to log into AWS and find out what’s going on. Rather than getting on with my life, I’ll have to work out what these idiot systems are actually trying to say. I don’t see anything out of the ordinary, so I copy and paste my error into DuckDuckGo and find something called bucket ownership controls. Bucket Ownership Controls. Apparently S3 previously wasn’t up to the task of controlling bucket ownership, so more bucket ownership controls were required. And then forcefully mandated.

As I was deploying a public website, I clicked through some settings, turned whatever this was off and completed the rest of the deployment. I don’t need security for a public site; that’s why I’m using S3 in the first place.
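For anyone who would rather fix this in code than in the console, the Terraform AWS provider documentation for `aws_s3_bucket_acl` gives a pattern for public-read ACLs on buckets created after the April 2023 change: opt the bucket back into ACLs with `aws_s3_bucket_ownership_controls`, relax `aws_s3_bucket_public_access_block`, and make the ACL wait for both. The sketch below extends the same idea to the bucket policy. It assumes AWS provider 4.x or later, and the resource names (`site`, `public_read`) are hypothetical, since the module’s own code isn’t shown:

```hcl
# Hypothetical names: the module's real resources aren't shown in the post.
resource "aws_s3_bucket" "site" {
  bucket = "mystery.site"
}

# Since April 2023, new buckets default to BucketOwnerEnforced,
# which disables ACLs entirely. This opts the bucket back in.
resource "aws_s3_bucket_ownership_controls" "site" {
  bucket = aws_s3_bucket.site.id

  rule {
    object_ownership = "BucketOwnerPreferred"
  }
}

# New buckets also start with all four Block Public Access settings on,
# which rejects both public ACLs and public bucket policies.
resource "aws_s3_bucket_public_access_block" "site" {
  bucket = aws_s3_bucket.site.id

  block_public_acls       = false
  block_public_policy     = false
  ignore_public_acls      = false
  restrict_public_buckets = false
}

# The ACL must not be applied until both settings above are in place.
resource "aws_s3_bucket_acl" "site" {
  depends_on = [
    aws_s3_bucket_ownership_controls.site,
    aws_s3_bucket_public_access_block.site,
  ]

  bucket = aws_s3_bucket.site.id
  acl    = "public-read"
}

# Public read access to every object in the bucket.
data "aws_iam_policy_document" "public_read" {
  statement {
    actions   = ["s3:GetObject"]
    resources = ["${aws_s3_bucket.site.arn}/*"]

    principals {
      type        = "*"
      identifiers = ["*"]
    }
  }
}

# A public policy is likewise rejected until Block Public Access is relaxed.
resource "aws_s3_bucket_policy" "site" {
  depends_on = [aws_s3_bucket_public_access_block.site]

  bucket = aws_s3_bucket.site.id
  policy = data.aws_iam_policy_document.public_read.json
}
```

Whether this also clears the CloudFront `origin.0.domain_name` error depends on what the module passes into that argument, which isn’t shown here.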

Then I thought a little.

Should I refactor the module?

Are the other five or six sites using this module now broken?

There’s a whole new Terraform resource to implement, so how is that handled in the statefile of previously deployed buckets?

Is this worth it?

The answer is no. I’m bored of playing issue tennis over trivial nonsense with the various entities of modern technology. I recall HashiCorp rolling out breaking changes for all S3 buckets around a year ago, as part of version 4.0 of their AWS provider. This year AWS has rolled out another “feature” that blocks my ability to apply security policies to a bucket with nothing in it. Thanks, Amazon, for ensuring that my nothing is secured. I appreciate the additional layer of security on top of the whole of AWS Identity and Access Management (IAM) tooling, the S3 bucket policies themselves and the already automatically applied “No public object” policies.
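Pinning the provider version in the root module is the usual guard against the HashiCorp half of this problem. A constraint like the one below keeps a configuration on the 4.x series of the AWS provider until someone deliberately moves it. It does nothing about AWS changing its own server-side defaults, which is exactly what happened here. A sketch, assuming the module was written against provider 4.x:

```hcl
terraform {
  required_providers {
    aws = {
      source = "hashicorp/aws"
      # "~> 4.0" means >= 4.0 and < 5.0, so a fresh `terraform init`
      # (or `terraform init -upgrade`) can't pull in 5.0 unannounced.
      version = "~> 4.0"
    }
  }
}
```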

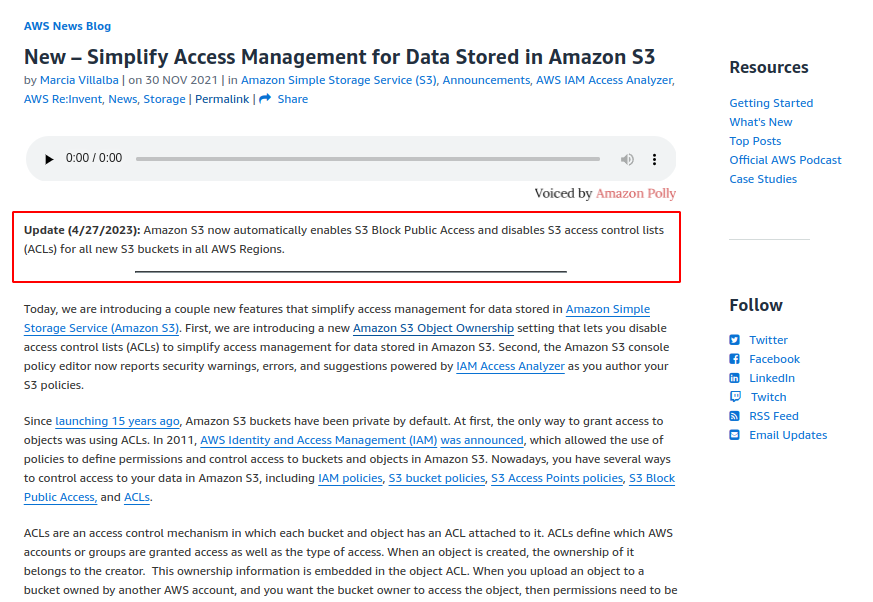

Here’s the relevant AWS blog post providing the headline details. Did you spot the bit where they announced this breaking change? It’s the literal small print under the title, added five months after the initial post. The change was rolled out on 27th April 2023, which is why my stuff is broken today.

As a customer, I don’t understand the need for it; I just know that I’m paying for the consequences. Let’s be frank here: this sort of crap is draining. Products that drain their user base tend to die out.

Aside from the rant

Obviously I’m not too pleased about this turn of events, but what’s the lesson for normal people whose work depends on cloud services?

If you’re using cloud provider services that are not standardised, then you’re buying SaaS: SaaS that the provider can muck around with as it likes.

What counts as standardised? Realistically, it’s probably only virtual machines and DNS. Database services might qualify, but it’s easy to stray from their intended happy path. S3, despite its widespread use, is getting tweaks on a per-provider basis, such as the AWS change above.

Kubernetes services are better, but they still have limitations and tweaks placed on them by the cloud provider. What works in one cloud might need changes in another. LoadBalancer-type Services and volumes are the usual culprits.

My now-broken Terraform module only used CloudFront, Route 53 and S3. I’d be amazed if AWS broke Route 53 in the future, but I’ll consider the other two services fair game.

If your business depends on a cloud provider, it probably needs a managed service provider (MSP) or a staffing arrangement to cover these sorts of changes. On a personal basis, I’ll move more of my work to Ansible. I’ve not been a fan of the statefiles that both Terraform and Pulumi use, and in the long term I don’t think the statefile concept is compatible with low maintenance in the face of cloud provider changes.

On the other side of things, there are providers who are potentially less motivated than AWS to roll out obnoxious changes. In the scenario above, I’m operating as an SME, and AWS is probably the wrong platform. AWS cares about enterprises, which in turn care about piling on more process, checks and balances to minimise the blast radius of employee and third-party screw-ups. Enterprise risk appetite is different from SME risk appetite.

I’ve found DigitalOcean to be less prone to changes and much more straightforward for the SME case of light, fast and reliable. You can see this in the pricing strategy: AWS is a convoluted mess of ‘it depends’ costing, whereas DigitalOcean gives you the exact costs up front. The future for my SME ventures likely holds a DigitalOcean VM, NGINX and a bunch of directories.

So for now:

- Route 53 is OK: I’ve never had a problem with it and I’m happy to keep using it

- AWS S3 and CloudFront I’m classifying as prone to breaking changes. I’m scheduling in a move to different options

NGINX was first released in 2004, which is worth mentioning.