Infrastructure as Code

don't let broken mice block your deployments

- technical

- cloud

- code


I’ve been on another loop around Infrastructure as Code tools, initiated by multiple explorations hunting for the appropriate tool to fit various clients’ culture and strategy. I’ve taken all of the tools below for a full run deploying to production, i.e. fully released commercial software has been deployed with each of them directly by yours truly. I thought it best to note down my impressions of each whilst I’ve still got the wounds.

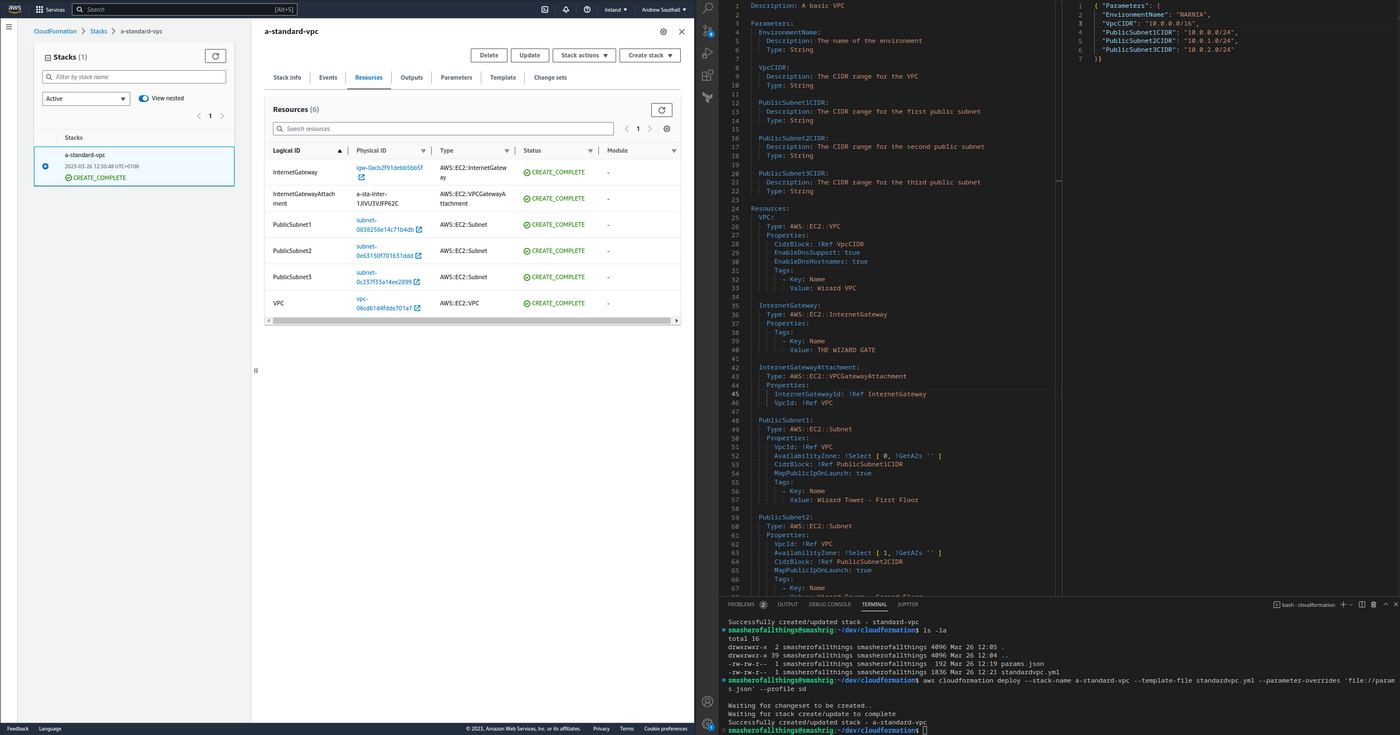

Cloudformation

Most of my cloud work for clients takes place on AWS which is generally the right choice for reasons that I would cover elsewhere. Cloudformation is AWS’ home-brewed IAC offering to organise the myriad of things that you rent from Bezos. Given that it’s AWS’ own tool and it’s both accessible and supposedly supported by AWS, the strategy from the department was to keep things accessible for less capable engineers and to yell at AWS when Cloudformation breaks. I think that’s a fair strategy, though my preferred strategy is to make sure your engineers are good and to not have to rely on third parties, a professional version of ‘git gud’.

The Good

I’m huge on visualisation - the most efficient method of parsing information for people is via sight. It’s so powerful that people’s impressions of information vary depending on how beautifully it’s presented. It’s an easy win to make something pretty. Cloudformation is not exactly stunning, but it does come with a UI. I can send a link to a build to non-technical stakeholders and if it’s good the text is green, if it’s bad the text is red. Having a shared, visual UI beats a screenshot of a terminal every time.

Additionally, it’s nice to not have to set up or provision something in the backend to store state. Cloudformation comes with its backend built in which is basically cheating compared to the other tools here, but is still something that I don’t have to worry about.

The Bad & The Unusual

Unfortunately, it’s almost too janky to use outside of introductory use cases. Want to loop through some IP addresses? You’re splitting a string. When writing Cloudformation templates it feels like I should be writing for a templating tool like Mustache or Jinja and thus have the same templating capabilities. Cloudformation even refers to your manifests as templates. Yet Cloudformation has such a small amount of available templating functionality that I wanted to bust out Jinja to do the actual templating. If I’m locked into Cloudformation in the future, I probably would do that to at least generate the end result.
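As a rough sketch of the "generate the end result" idea, the loop can live in an ordinary language and Cloudformation only ever sees the finished template. This uses plain Python and the standard library rather than Jinja, and the CIDR list, port and the `AppSecurityGroup` logical name are all made up for illustration:

```python
import json

# Hypothetical inputs: these CIDR blocks are illustrative only.
ALLOWED_CIDRS = ["10.0.0.0/24", "10.0.1.0/24", "192.168.10.0/24"]


def build_template(cidrs, port=443):
    """Return a CloudFormation template dict with one ingress rule per CIDR."""
    ingress = [
        {"IpProtocol": "tcp", "FromPort": port, "ToPort": port, "CidrIp": cidr}
        for cidr in cidrs
    ]
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Resources": {
            # "AppSecurityGroup" is an assumed logical name, not from a real stack.
            "AppSecurityGroup": {
                "Type": "AWS::EC2::SecurityGroup",
                "Properties": {
                    "GroupDescription": "Generated ingress rules",
                    "SecurityGroupIngress": ingress,
                },
            }
        },
    }


if __name__ == "__main__":
    # Print the rendered JSON template, ready to hand to Cloudformation.
    print(json.dumps(build_template(ALLOWED_CIDRS), indent=2))
```

The loop happens before Cloudformation is involved, so the template itself stays dumb and there is no string splitting inside it.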

Then there’s the operational aspects of Cloudformation. Deploying a fresh stack but you fat-fingered a property? The stack gets stuck in a ROLLBACK_COMPLETE state and you need to delete the whole stack to continue. Deleting whole stacks of infrastructure is not something I want to get used to, but it’s something that has to occur if you get caught in various AWS landmines. There’s not the template validation that you would expect either, so silly mistakes cost you substantial amounts of time.

Cloudformation can only handle a limited number of resources per stack: the cap was 200 for years and AWS has since raised it to 500. It’s also hard limited to directly provided AWS resources only. You’re not provisioning your EC2 machines or populating your RDS databases with Cloudformation - you need something else to actually deploy to your AWS infrastructure outside of introductory things like user-data. If you’re playing in the big leagues you’re going to need additional tooling. Some AWS services are just not supported at all in Cloudformation and support for new services has been late.

Cloudformation is technically run on the AWS side, i.e. the computation and connections requisitioning your cloud infrastructure happen on AWS servers. This means that secrets, objects, credentials and other data cannot be accessed if they aren’t accessible to AWS’ public servers. For handling sensitive things like passwords to downstream systems this becomes a problem. I’ve not searched in depth, but I couldn’t find an obvious method to implement secrets inside of Cloudformation templates the same way you can in Terraform with encrypted secrets or in Ansible with Ansible Vault commands. The solution I found was to create the secrets manually as AWS Secrets Manager Secrets that are then referenced by whatever services you’re managing through Cloudformation.
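One way to wire up a manually created secret is Cloudformation’s dynamic reference syntax, which resolves the value at deploy time. A minimal sketch, assuming the secret already exists in Secrets Manager; the secret name `prod/db`, the key `password` and the `AppDatabase` logical name are hypothetical, and the resource is a fragment rather than a complete database definition:

```python
import json


def secret_ref(secret_id, json_key):
    """Build a CloudFormation dynamic reference to one key of a Secrets Manager secret."""
    return "{{resolve:secretsmanager:" + secret_id + ":SecretString:" + json_key + "}}"


# Fragment only: a real AWS::RDS::DBInstance needs more properties than this.
db_fragment = {
    "AppDatabase": {  # assumed logical name
        "Type": "AWS::RDS::DBInstance",
        "Properties": {
            "MasterUsername": "app",
            # The password never appears in the template, only the reference.
            "MasterUserPassword": secret_ref("prod/db", "password"),
        },
    }
}

if __name__ == "__main__":
    print(json.dumps(db_fragment, indent=2))
```

The secret itself still has to be created outside the template, so this doesn’t remove the manual step, it only keeps the value out of the template text.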

Additionally, with the computation taking place on AWS’ side, you make a call to Cloudformation that then sends its own calls to other AWS services to manage your infrastructure. If something goes wrong then you’re enduring a rollback in reverse through the same proxied process. Substantial stacks can take 20 mins to deploy, fail and roll back. For clunkier services such as Cloudfront with poor error responses, long load times and no checks, this time really does add up.

Cloudformation does what it does and it’s built the way it’s built. There’s not much that can be fixed without a complete recreation, hence if you’re using Cloudformation you’re either new to it or have accepted it for what it is, which is the vendor provided solution. That brings its own non-technical issues that are worth mentioning.

I find that the worst element of Cloudformation is the uphill struggle to get technical reasoning against using it to register with less technical stakeholders. From their point of view, Cloudformation is an AWS tool and AWS made the cloud, therefore Cloudformation can’t possibly have faults or issues. Even when you provide the direct evidence of GitHub issues and statements from AWS, stakeholders with this attitude are not to be reasoned with and instead Cloudformation’s failures are your fault. This problem isn’t AWS’ doing. However, if you’re in a hostile environment it’s best to just forge ahead with something that doesn’t have the AWS baggage attached to it, otherwise you can’t have sensible conversations. Chances are you may well need an additional solution anyway - if your application utilises a lot of S3 hosted files then you’re going to need to manage that with some other tooling as discussed above.

As part of the shared ownership model the customer more or less takes responsibility for anything that isn’t literal physical machines. That’s a completely reasonable and fair model for splitting responsibilities between Cloud provider and customer. Cloudformation has a right to fail and you have the responsibility to accept that as part of your tool selection. I’ve worked with both AWS Professional Services and with premium support and in my experience if there’s something wrong with a service it gets kicked back to the service’s team to fix. Just because Cloudformation has an AWS badge doesn’t make it bulletproof and I don’t expect it to have any more reliability than the other options below. So why take on that baggage and accept being further abstracted away from the action?

Verdict

Cloudformation is a weird tool. I like having the state managed inside the cloud provider with an attached UI. The UI performs the same function as an account billings page where I can cancel forgotten subscriptions. In my mind Cloudformation works for simple, stagnant infrastructure that needs no maintenance such as:

- VPCs/Subnets/Route Tables

- Internet/Private Gateways and NATs

- Common Security Groups

- IAM Users

Anything with any sort of continuous changes such as Auto Scaling Groups, Lambdas and application maintenance in general, I wouldn’t want to manage long term with Cloudformation. Neither would I bother with anything cutting edge or even slightly exotic. Even Lambdas push the boundaries as you still need to separately update, package and upload your code from outside of Cloudformation, meaning that you need other tooling to support the elements that Cloudformation can’t support.

Overall, I think I’d manage a VPC via Cloudformation rather than in Terraform, but that’s about it. Sure, I can’t dynamically loop through subnets, NAT Gateways, route tables and the like. However, VPCs have standard patterns and a bit of copy-pasting gets me a UI and no statefile to manage.

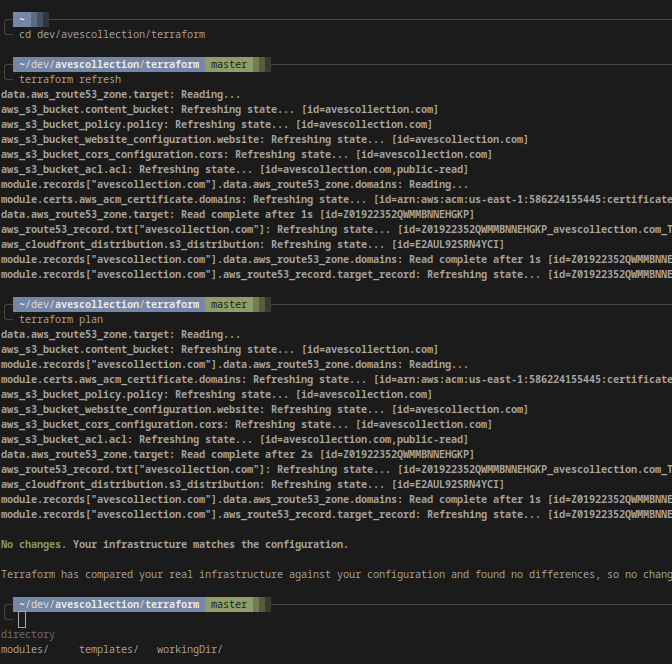

Terraform

Terraform is the cloud tool that I’ve spent the most time with. It has both ‘resource’ things and ‘data’ things, meaning that it can both ‘create’ and ‘find out’. It supports pretty much every provider on the planet from the obvious cloud vendors to SaaS APIs such as MongoDB Atlas or non-SaaS APIs like Dominos Pizza. It can also work with multiple providers in the same set of code. I’ve handled two cloud providers across multiple regions and managed Kubernetes clusters in the same deployment. You can manage pretty much everything in the same code base.

The Good

Hashicorp’s Terraform works with all the cloud providers, so once you know and understand it you can retain most of your knowledge as you bounce around various environments, teams or clients. Terraform can also manage quite a lot of resources, although I found it began to crap out frequently as we approached 1.5k resources.

Most technical staff have heard of it, it’s fully open source, most of the stuff Hashicorp does makes sense, the documentation is actually pretty good. I have no core objections to Terraform usage in most use cases.

terraform destroy is a wonderful command most of the time. Being able to nuke your infrastructure from existence is great, although I only find myself running destroy commands when testing Terraform deployments. It also doesn’t always work.

The Ugly

Terraform occasionally shits the bed. It occasionally corrupts your data, nukes the wrong thing, hangs, complains about completely irrelevant things and chucks the odd tantrum. It’s occasional, but it happens and grows more frequent as it controls more complex stacks. You want to split your Terraform early and often to avoid it getting out of control.

I’ve also found that Terraform conflicts with usage in teams or in pipelines. In these environments only one application of Terraform can happen at a time - when initialising a deployment Terraform will lock the state, so until that lock is released your pipelines and colleagues sit around waiting. Quite often Terraform will fail to release the lock, so much so that I usually include a bash script to quickly delete the lock from the chosen backend.
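For comparison with deleting the lock from the backend by hand, Terraform also ships a `force-unlock` command that takes the lock ID printed in the lock error. This is a small wrapper sketch around that command, not the bash script mentioned above; the function names and the `workdir` parameter are my own assumptions:

```python
import subprocess


def force_unlock_command(lock_id):
    """Build the CLI call that releases a stuck state lock without prompting."""
    return ["terraform", "force-unlock", "-force", lock_id]


def force_unlock(lock_id, workdir="."):
    """Run the unlock inside the Terraform working directory that owns the state."""
    # check=True raises CalledProcessError if Terraform exits non-zero.
    subprocess.run(force_unlock_command(lock_id), cwd=workdir, check=True)
```

Only reach for this when you are sure nobody else is mid-apply, since it removes the lock regardless of who holds it.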

The state file is also worth mentioning. Everything Terraform does gets dumped into a single JSON file that is the “statefile”. The statefile holds literally everything managed by that deployment, so expect to eventually come across 20k LOC statefiles. If you ever need to fix or replace a statefile, you’re in for a bad time.
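Because the statefile is plain JSON, a few lines of scripting can at least tell you what is in it before you attempt surgery. A minimal sketch, assuming the version 4 state layout with a top-level `resources` list; the sample state below is invented and heavily trimmed:

```python
import json
from collections import Counter

# Invented, trimmed-down sample; a real statefile carries far more per resource.
SAMPLE_STATE = """
{
  "version": 4,
  "resources": [
    {"mode": "managed", "type": "aws_vpc", "name": "main", "instances": [{}]},
    {"mode": "managed", "type": "aws_subnet", "name": "private", "instances": [{}, {}, {}]},
    {"mode": "data", "type": "aws_ami", "name": "base", "instances": [{}]}
  ]
}
"""


def count_managed(state_text):
    """Count managed resource instances per type, skipping data sources."""
    state = json.loads(state_text)
    counts = Counter()
    for resource in state.get("resources", []):
        if resource.get("mode") == "managed":
            counts[resource["type"]] += len(resource.get("instances", []))
    return counts


if __name__ == "__main__":
    for resource_type, total in sorted(count_managed(SAMPLE_STATE).items()):
        print(resource_type, total)
```

A tally like this is also a cheap way to spot when a deployment is growing large enough to be worth splitting.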

The Bad

Hashicorp releases have broken Terraform. Sometimes it’s deliberate breaking between major versions, which is understandable but still a burden you’re forced to deal with. Other times they’ve completely broken deployments. Some features will never come such as variablizing backend configuration, meaning that you’re always going to have to hard code something or manage it outside of Terraform.

There are other elements that I can’t get my head around either. Migrating from version 0.11.x to 0.12.x broke resources whose name started with a digit. That’s not a number by the way, that’s the string version of a digit, i.e. a string. Sure, it was an intended change, however at the time it wasn’t in the migration guide, so it blew up with a load of errors. Having a handful of “Hashicorp did something” moments is worth acknowledging.

In my opinion, the biggest flaw is Hashicorp Configuration Language (HCL). I cannot think of HCL as actual code. Its loops are clunky with both for_each and count feeling like cheat codes rather than control statements. Conditional statements are usually ternary statements like:

    var.existing_variable ? "The variable exists" : "The variable does not exist"

I occasionally see map lookup function calls providing a map, an index to search for and a default value if that index does not exist:

    lookup({Frank="Burger", Pete="Hot Wings"}, "Steve", "No order for 'Steve'")

That works but it scales horribly. At the same time HCL allows for list and object comprehensions, creating lists and objects from lambda style expressions in a single line. The following code makes a new list of upper-cased strings from a different list of strings, but only if the string ends with ‘Teeth’:

    $ cat test.tf
    locals {
      collectionOfWeirdThings = [
        "pandaTeeth",
        "lionTeeth",
        "myOwnTeeth",
        "aPetRock"
      ]

      # The following one line can process millions of things...
      myFavouriteWeirdThings = [for thing in local.collectionOfWeirdThings : upper(thing) if endswith(thing, "Teeth")]
    }

    output "my_favs" {
      value = local.myFavouriteWeirdThings
    }

    $ terraform plan

    Changes to Outputs:
      + my_favs = [
          + "PANDATEETH",
          + "LIONTEETH",
          + "MYOWNTEETH",
        ]

When dealing with complex infrastructure you end up fighting HCL to express simple logic or easily falling down a rabbit hole to a nested hellscape.

Each of the above issues is individually annoying, but when you encounter them all at once it becomes horrendous. Unfortunately, less capable developers often end up piecing together monstrosities where they’re running multiple lookup function calls on nested maps tied to critical production infrastructure managed by version 0.11.x. In those scenarios the best option is to rip it out and rebuild anew before the underlying provider APIs move out from under you. Terraform “codebases” are the number one “bin and recreate” codebases I’ve come across.

It’s also for this reason that I don’t use Terragrunt. Terragrunt is a wrapper around Terraform that splits out your Terraform based on the directory structure that you create. It’s possibly an improvement once you get used to the rigid structure, however, it still uses HCL. So now you have Terraform and Terragrunt to keep happy and HCL². I can’t fathom what benefit I could get by introducing more HCL so I avoid Terragrunt altogether.

Verdict

Terraform is a good tool. It’s a damn good tool. I know its issues as I’ve used it to build massive, complex, production grade infrastructure quickly and in hostile environments. It can get out of hand, but if you know how to handle it, it’s still a reliable option. A huge strength is the sheer number of providers and levels that Terraform works at. If you’re working across clouds, Kubernetes or specialist providers like Backblaze, Terraform will save you a lot of headaches.

As a real life example, I took a ForgeRock deployment working across multiple accounts and Kubernetes clusters and deployed the machines, VPC Service Endpoint and the full Kubernetes ForgeRock manifests in one Terraform deployment. This replaced about three Helm charts, a bunch of manually added manifests (i.e. K8s storageclasses) and the reliance on a third party infrastructure team who had grown to hate ForgeRock dedicated infrastructure. There’s not many tools that can do all that.

Pulumi

I liked using Pulumi. I’ve used it for rolling out small projects using the Python implementation and haven’t had any issues with it. As it’s all managed within your interpreter you have the full flexibility of whatever language you’re working with to do some really impressive things. It’s a blue sky thinking tool that I can see a great future ahead for. There’s just two things I cannot get over.

Personal Preference

Generated names. As I mentioned for Cloudformation, visualisation matters. Spamming random characters all over resource names messes with my concept of organisation. Most cloud resources have unique IDs or the name itself is the unique ID, hence I cannot think of a solid reason as to why random string suffixes are a process in Pulumi. No other infrastructure management tool slaps random strings at the end of resources and makes you jump through hoops to disable it. I’m not sure what I lose by dropping the random name but I’m not keen to find out.

Secondly, I have absolutely no desire to “log in” with my CLI tools. My CLI handles all sorts of sensitive and critical data and access. The last thing I want to have blocking a deployment is to have to authenticate with a third party’s service. I don’t care if there are ways around it, I can’t bring myself to trust it.

There are going to be use cases for Pulumi that do not fit into other tools as well. I can imagine that if you had a set of generated infrastructure per client that needed a dynamically created manual detailing special endpoints, then Pulumi would do an amazing job of that. I haven’t come across that sort of use case so I personally don’t have much desire to use Pulumi for now.

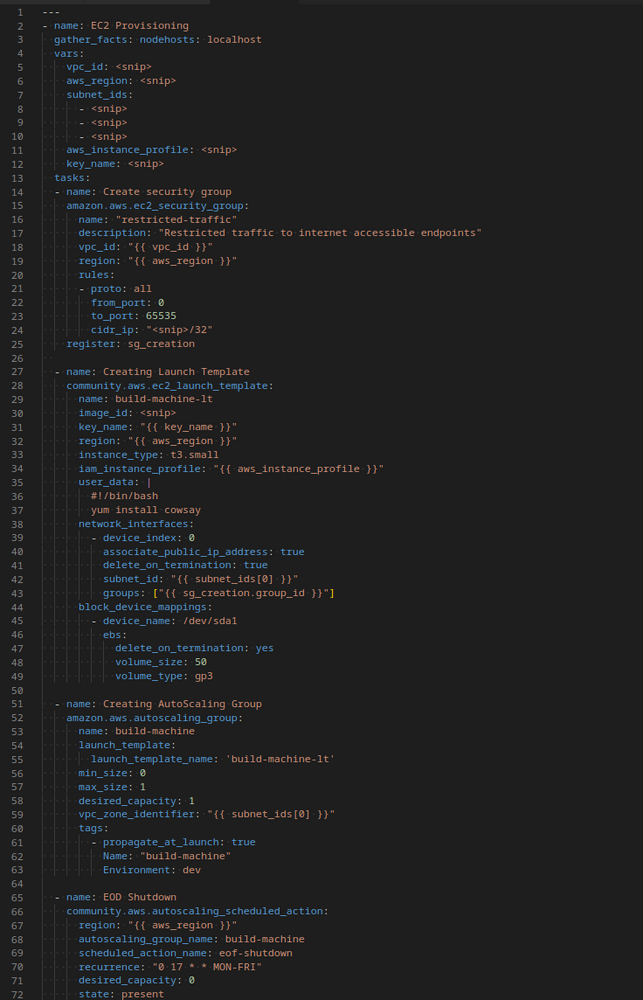

Ansible

This was an unexpected blast from the past, but Ansible works with a number of the cloud providers. I used to use Ansible a long time ago for minor jobs but didn’t get too involved with the tool until I needed to manage multiple on-premises machines. Then I tried Ansible for some AWS provisioning and found myself loving it.

The Not So Great

Some of the ansible modules are community based and a few others are official, at least in AWS land. Ansible isn’t as well supported for cloud providers as say Terraform, though most resources are available as downloadable collections.

Also, Ansible has a weird way of managing variables: they need to be Jinja variablized into your playbook. That means that a variable like this_variable needs to be stringified and then templated like so:

    - name: A debug task with a variable
      debug:
        msg: "{{ this_variable }}"

For strings it’s straightforward but it seems odd to be quoting lists and dicts. Reserve a few brain cells for this knowledge. Also the output for Ansible is often ugly. You have to jump through some hoops to make it look good.

Deleting infrastructure is also relatively clunky compared to a terraform destroy command - usually you’re going through your playbook and stating each resource as absent. That’s not the worst experience but it’s not the best. However, deletion has to roll through the resources in the opposite order to creation because of the dependencies. For managing Kubernetes resources, which are logical, sure, you can delete most resources whilst they’re still in “use” by other resources such as services, configmaps, secrets, storageclasses, etc.

For most cloud infrastructure that isn’t the case. This is a pain in the ass, but then I have to face up to the reality that I’m just not deleting that sort of infrastructure that often. It’s probably worth logging into the console for that sort of event so you can make sure to type ‘delete me’ into the confirmation box.

The major issue, however, is the patchy support. AWS does have official modules, but they don’t support resources such as RDS serverless. Why? Don’t know. As there’s an official set of AWS modules it’s unlikely anyone is going to put the work in on AWS’ behalf, so cases like that are probably lost causes.

The Good

There’s no agents or strange abstracted elements like .terraform directories or 20 different pulumi binaries. There’s no additional setup like backends or pulumi login.

Ansible is built to be idempotent. Two people can run the same playbook at the same time, at different times, one after the other, on different machines, whatever, both users will have the same end result.

Ansible works with cloud APIs, local machines, remote machines, Kubernetes, Vagrant, and with a load of other things.

Ansible doesn’t mentally stretch itself by trying to build dependency trees or attempting impossible rollbacks. It just works through a set list of tasks, checks if the task has been done and if it hasn’t, Ansible does it. That is exactly what I would do if I were manually provisioning cloud infrastructure.
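The "check, then do only if needed" loop is simple enough to sketch in a few lines. This is a toy model of the idea rather than how Ansible is implemented; the dict standing in for real infrastructure and the task names are invented:

```python
def ensure(state, key, desired):
    """Apply one task: change the state only if it differs from the desired value."""
    if state.get(key) == desired:
        return "ok"
    state[key] = desired
    return "changed"


def run_playbook(state, tasks):
    """Work through the tasks in order and report what each one did."""
    return [ensure(state, key, desired) for key, desired in tasks]


if __name__ == "__main__":
    infrastructure = {}  # stands in for whatever the cloud API reports
    tasks = [("vpc_cidr", "10.0.0.0/16"), ("web_sg", "allow-https")]
    print(run_playbook(infrastructure, tasks))  # first run makes the changes
    print(run_playbook(infrastructure, tasks))  # second run finds nothing to do
```

There is no dependency graph and no rollback here, which is the point: a second run is harmless and a failed run can simply be repeated.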

Ansible is an automation tool. It automates the stuff that I would do rather than try to think for itself. After many years wrestling with tools, I think all I ever wanted from them was simple automation. Simple is far easier to fix, debug and work with than easy.

Even where no one in the organisation uses Ansible, I can use it myself to automate procedures that haven’t been captured by other tools. Ansible is non-exclusive and isn’t treading on other people’s toes - it feels like sneaking my dog into work. No one knows he’s here with me, but in the background I’m stripping all the 0.0.0.0/0 security group rules from every security group across 5 accounts without locking statefiles.
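To make that last job a little more concrete, here is the filtering half of it as a sketch. It assumes security groups in the dictionary shape that boto3’s describe_security_groups returns (IpPermissions containing IpRanges with a CidrIp); the sample group is invented and no AWS calls are made:

```python
OPEN_CIDR = "0.0.0.0/0"


def open_ingress_rules(security_group):
    """Return the group's ingress permissions narrowed to the open IPv4 range."""
    open_rules = []
    for perm in security_group.get("IpPermissions", []):
        open_ranges = [r for r in perm.get("IpRanges", []) if r.get("CidrIp") == OPEN_CIDR]
        if open_ranges:
            # Keep only the fields that identify the rule, plus the open ranges.
            rule = {k: v for k, v in perm.items() if k in ("IpProtocol", "FromPort", "ToPort")}
            rule["IpRanges"] = open_ranges
            open_rules.append(rule)
    return open_rules


if __name__ == "__main__":
    # Invented example group: SSH open to the world, HTTPS limited to one range.
    sample_group = {
        "GroupId": "sg-example",
        "IpPermissions": [
            {"IpProtocol": "tcp", "FromPort": 22, "ToPort": 22,
             "IpRanges": [{"CidrIp": "0.0.0.0/0"}, {"CidrIp": "10.0.0.0/8"}]},
            {"IpProtocol": "tcp", "FromPort": 443, "ToPort": 443,
             "IpRanges": [{"CidrIp": "10.0.0.0/8"}]},
        ],
    }
    print(open_ingress_rules(sample_group))
```

Each returned rule is the kind of thing you would then hand to a revoke call for that group; in an Ansible playbook the same filter would sit between a task that gathers the groups and one that rewrites their rules.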